I’m Andrew Thaler and I build weird things.

Last month, while traveling to Kuching for Make for the Planet Borneo, I had an idea for the next strange ocean education project: what if we could use bone-conducting headphones to “see” the world like a dolphin might through echolocation?

Bone-conducting headphones use speakers or tiny motors to send vibrations directly into the bones of your skull. This works surprisingly well for listening to music or amplifying voices without obstructing the ear. The first time you try it, it’s an odd experience. Though you hear the sound just fine, it doesn’t feel like it’s coming through your ears. Bone conduction has been used for a while now in hearing aids as well as military- and industrial-grade communications systems, but the tech has recently cropped up in sports headphones for people who want to listen to music and podcasts on a run without tuning out the rest of the world. Rather than anchoring to the skull, the sports headphones sit just in front of the ear, where your lower jaw meets your skull.

This is not entirely unlike how dolphins (and at least 65 species of toothed whales) detect sound.

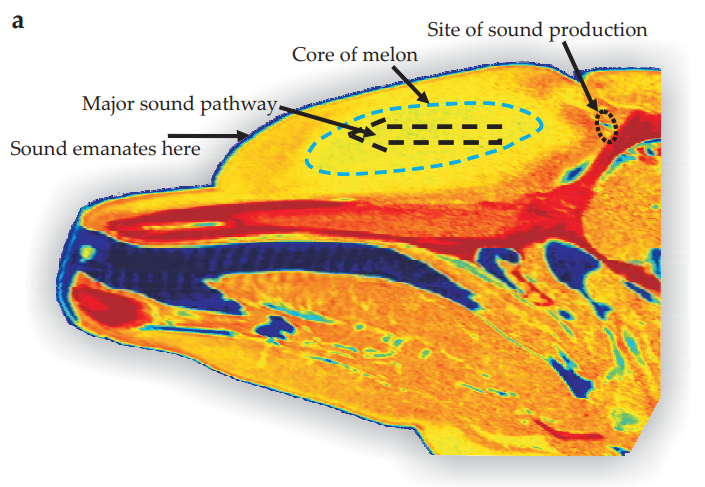

Dolphins, like bats, have a biosonar system that allows them to detect objects through echolocation. That big, bulbous head of theirs is filled with acoustic fat, a dense lipid that allows them to focus sound. Those sounds are created by a vocal structure under their blowhole called the dorsal bursae–monkey lips complex, and honestly I’m only going into so much detail here because of how great that name is. Ultrasonic clicks travel from the monkey lips complex through the acoustic fat-filled melon and emanate out the front of it. Those clicks then bounce off objects in the ocean and return to the dolphin, who somehow translates that information into spatial awareness.

Incidentally, we only confirmed that dolphins can echolocate in the 1960s, which feels really late to me considering that barely 30 years later we were already making video games for kids as if it were universal common knowledge.

Here’s the weird thing, though. Dolphins don’t have ears.

At least not externally. Dolphins are highly streamlined for fast travel through seawater and exposed, external ears would not only create drag but would produce cavitation and turbulence that could actually interfere with sound detection. Dolphins are, among other things, extreme audiophiles. So how do they hear? Nestled in their lower jaws is that same acoustic fat found in their big heads. Their lower jaws interface with the structures in their inner ear, allowing sound waves to travel up their jaw and into their ears. Dolphins “hear” with their jaws.

For a really good overview of dolphin (and bat) biosonar, check out Au and Simmons (2007) Echolocation in Dolphins and Bats. DOI: 10.1063/1.2784683. It’s not open access, but there’s probably some sort of Hub for Science where you could easily find a copy.

So, consumer bone-conducting headphones that sit at the interface of your jaw and skull are a pretty good proxy for how dolphins receive sound, but how do we produce it?

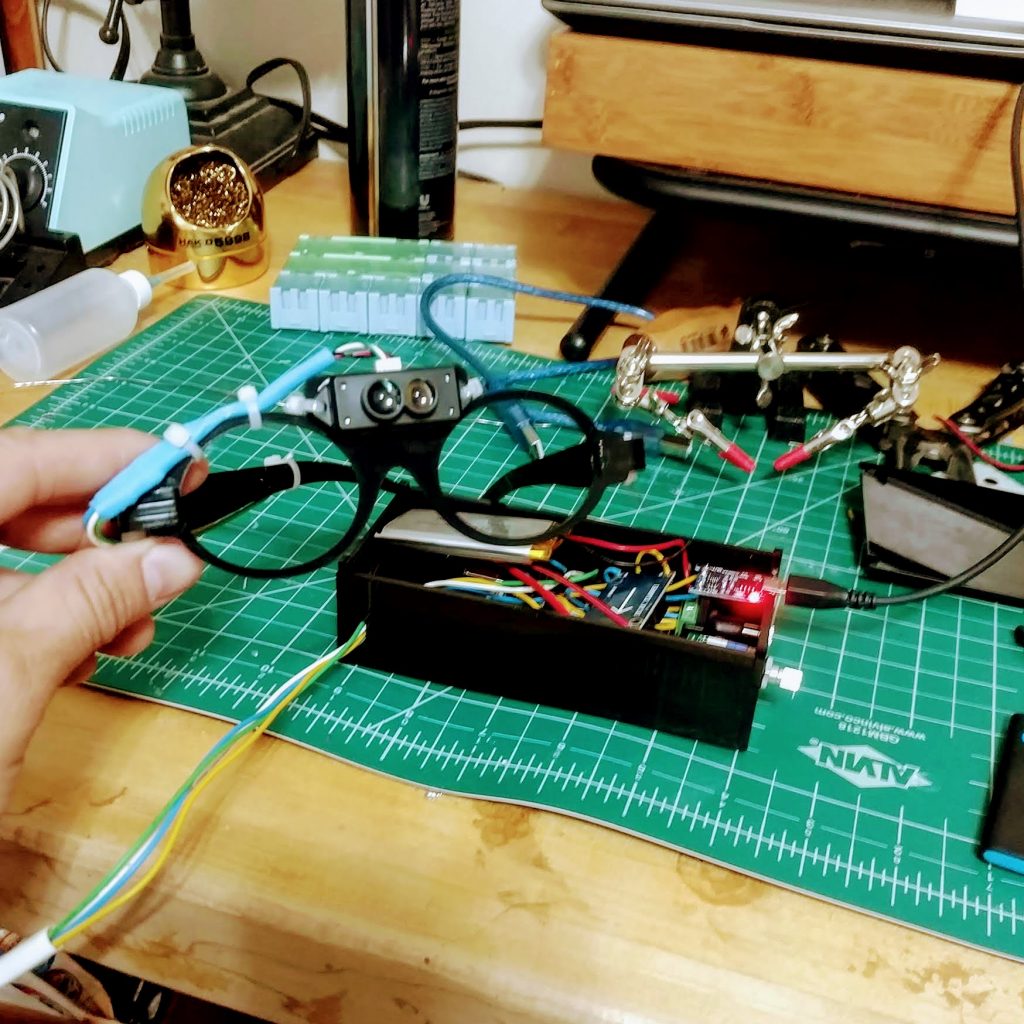

The ubiquitous ultrasonic rangefinder that seems to come packaged in every Arduino kit was the first obvious answer. Dolphins use ultrasound, so why shouldn’t we start there? After a bit of tinkering, I realized that the device has a major limitation: its range is tiny, barely a meter on a good day with perfect conditions. Long-range units get exponentially more expensive and consume increasingly large amounts of power. I wanted a head-mounted dolphin-inspired echolocation system that was compact!

Thanks to the consumer drone movement, cheap time-of-flight LiDAR units (not true LiDAR systems that use laser reflection, but focused infrared emitters that do almost the same thing for less money) are increasingly available. These modules are compact, have a low power draw, interface easily with Arduino, and have a 12 m operating range. Perfect! It’s not ultrasound, but it is a pretty good proxy for what I’m trying to build.
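For a rough sense of what "time-of-flight" means here, the sketch below converts between distance and round-trip pulse time for both light and sound in air. It only illustrates the general principle (distance = speed × time / 2); it is not how any particular module works internally, and the function names and the speed-of-sound figure are assumptions chosen for the example.

```python
# Time-of-flight ranging in its simplest form: a pulse goes out, bounces
# off a target, and comes back, so the one-way distance is half the trip.
# Constants and names below are illustrative assumptions, not DolphinView code.

SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value; air is very close to this
SPEED_OF_SOUND_AIR_M_S = 343.0      # approximate, dry air near 20 degrees C


def round_trip_time(distance_m, speed_m_s):
    """Seconds for a pulse to reach a target at distance_m and return."""
    return 2.0 * distance_m / speed_m_s


def distance_from_round_trip(time_s, speed_m_s):
    """One-way distance in meters implied by a measured round-trip time."""
    return speed_m_s * time_s / 2.0


if __name__ == "__main__":
    # 0.3 m and 12 m are the LiDAR limits quoted in this post; 1 m is the
    # rough ceiling observed for the kit ultrasonic rangefinder.
    for d in (0.3, 1.0, 12.0):
        t_light = round_trip_time(d, SPEED_OF_LIGHT_M_S)
        t_sound = round_trip_time(d, SPEED_OF_SOUND_AIR_M_S)
        print(f"{d:5.1f} m  light: {t_light * 1e9:8.2f} ns   sound: {t_sound * 1e3:8.3f} ms")
```

The takeaway is one of scale: an echo off a target at 12 m comes back in tens of nanoseconds for light but tens of milliseconds for sound in air.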

Finally, I harnessed the power of the Glowforge to cut the housing and head-mount from some black acrylic, combined a slew of extra components for charging and driving the audio signal to a pair of headphones, bashed out a quick and dirty bit of code to get everything talking, strapped a LiDAR unit to a pair of lasercut glasses with a mounting bracket, and DolphinView was born!

Don’t worry, it’s all open-source and ready to build. The shapefiles, bill of materials, code, and associated documentation are all on GitHub as well as Thingiverse.

- GitHub: DolphinView – A LiDAR system to convert distance measurements into signal pulses in bone-conducting headphones.

- Thingiverse: Head-mounted LiDAR array that communicates through bone conduction.

Taking the system on a test drive through our local park was a weird experience. Having full hearing plus a constant drum beat of clicks alerting me to the closeness of whatever object was directly ahead of me was a bit surreal, and it took a while to get used to the sensory overload. The system is far from perfect and would benefit from the extra processing power you could get from something like a Raspberry Pi so that the audio driver and LiDAR could function independently. Because of how the delay function works, it doesn’t do a great job telling you if something is heading straight towards you at high speed. It would be interesting to add an ultrasonic rangefinder to let the wearer sense things closer than the LiDAR’s 30 cm minimum distance.
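To make the delay problem concrete, here is a minimal desktop simulation in Python. It is not the DolphinView firmware, which runs on an Arduino and is on GitHub. It assumes a simple linear mapping from distance to the pause between clicks, with hypothetical 50 ms and 1 s bounds, and it assumes the loop cannot take a new reading while it waits out that pause. Only the 30 cm and 12 m range limits come from the post; every other name and number is made up for illustration.

```python
# Hypothetical model of a blocking "measure, click, wait" loop.
# Only the 0.3 m and 12 m limits come from the post; the interval bounds
# and the linear mapping are assumptions for illustration.

MIN_RANGE_M = 0.3
MAX_RANGE_M = 12.0
MIN_INTERVAL_S = 0.05  # assumed pause between clicks at closest range
MAX_INTERVAL_S = 1.0   # assumed pause between clicks at farthest range


def click_interval(distance_m):
    """Map a distance to a pause between clicks: nearer means faster clicks."""
    clamped = min(max(distance_m, MIN_RANGE_M), MAX_RANGE_M)
    fraction = (clamped - MIN_RANGE_M) / (MAX_RANGE_M - MIN_RANGE_M)
    return MIN_INTERVAL_S + fraction * (MAX_INTERVAL_S - MIN_INTERVAL_S)


def simulate_blocking_loop(start_m, approach_speed_m_s):
    """Return (time_s, distance_m) for each reading taken while an object
    approaches at constant speed, stopping once it is inside MIN_RANGE_M."""
    if approach_speed_m_s <= 0:
        raise ValueError("approach_speed_m_s must be positive")
    readings = []
    t = 0.0
    distance = start_m
    while distance > MIN_RANGE_M:
        readings.append((t, distance))
        # The loop sleeps here, so the object keeps moving unobserved.
        t += click_interval(distance)
        distance = start_m - approach_speed_m_s * t
    return readings


if __name__ == "__main__":
    for speed in (1.0, 10.0):
        readings = simulate_blocking_loop(MAX_RANGE_M, speed)
        print(f"{speed:4.1f} m/s approach: {len(readings)} readings before contact")
```

Under these assumptions, something closing at walking pace produces a long, accelerating run of clicks, while something closing at 10 m/s gets only a couple of readings before it arrives. Running the sensor and the audio independently, as suggested above, would avoid that blind spot.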

Walking around, you definitely do start building up a sense of what all the clicks mean, and with a little practice, you can easily pick out things like open doorways with your eyes closed*.

We don’t actually know what dolphins “see” when they project sounds at complex objects. From experiments and models, we know that they can perceive thickness as well as distance from target objects. Without being inside a dolphin’s brain, we have no real way of knowing if they can form complex, 3-dimensional models of the world through biosonar alone or if it acts more like the sweeping pings of a ship’s SONAR.

These kinds of projects are not nearly as daunting as you might think. From conception to realization was less than 2 days (excluding shipping time). The code is barely 15 lines, most of which is cobbled from existing code. The lasercut pieces are nice, but not necessary. The entire build cost less than $100. Documentation took almost as long as actually building the thing.

So get out there and make weird things!

If you’re not sure where to start, Environmental Monitoring with Arduino: Building Simple Devices to Collect Data About the World Around Us is a fantastic book to introduce you to the world of DIY sensor systems.


*Look, I know what you’re thinking. Yes, maybe, possibly a much more refined iteration of this could be a useful aid for people with vision impairments. But this is a goofy thing I kludged together in a couple of days to teach kids about dolphins. Throwing random pieces of tech into the ether is far less appealing than just making society and public spaces welcoming and accessible to people of all abilities.

you might get better results if you sent the signals to the tongue via electrical array. these signals go to the brain much faster. it feels a little like pop rocks on the tongue. It’s effective

this is the sort of electrical array i mean:

https://www.researchgate.net/figure/A-BrainPort-device-B-Electric-grid-array-that-sits-on-the-tongue-and-C_fig1_266085607

Does the visually impaired community use anything similar to this?

Maybe you can turn it into a point cloud and then an STL, then you could 3D print random souvenirs you encounter. Or indeed 3D print a tactile thing for blind people. They could then improve their “LIDAR reading” to more accurately see around them.

But what did it sound like? If ever there was an article that could do with an accompanying video, this is it…

The convenience of this solution is that your tongue is free, so you can talk at the same time.