The dissemination of science follows the conventional route of rigorous peer-review followed by publication in an accredited scientific journal. This process has been the standard foundation from which the general public can trust that the science is, at the very least, valid and honest. Of course this system is not without its flaws. Scientific papers of questionable authority, dishonest methodology, or simply flawed design frequently make it through the gates of peer-review. Politically charged papers possess strong biases and many high impact journals favor sexy or controversial topics.

Beyond the conventional route of peer-review, there exists a vast accumulation of gray literature – conference reports, technical notes, institutional papers, and various articles written for specific entities that enter into general circulation without the filter of peer-review. Much of gray literature is valid, robust science, but much of it is not. The challenge is that sometimes gray literature is the only science available.

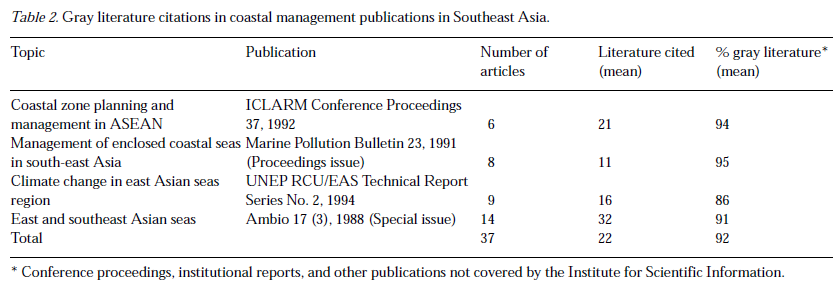

Especially in fisheries, where policy and management must be made on the temporal scale of a fishing season, gray literature is heavily relied upon. Peer-review can take upwards of a year from submission to publication, at which point stock assessments and management proposals may already be out of date. For conservation issues that are time-sensitive, gray literature may be the only option. Even scientific papers in certain marine fisheries rely heavily on gray literature. Some journals have been found to cite up to 94% gray literature.

A perfect example of this recently came across my inbox. In A preliminary investigation of smooth dogfish (Mustelus canis) at-sea processing techniques, a policy-driven report funded by the Pew Charitable Trusts, the authors attempt to answer a practical question – “Does smooth dogfish meat spoil quickly if not processed immediately after landing?” Though seemingly mundane, this question has major implications for shark conservation. Many fishermen believe that, post-mortem, smooth dogfish meat spoils more rapidly than other shark meat, and that the catch must be bled and finned before nitrogenous waste builds up in the tissue and ruins the catch. Smooth dogfish are finned at sea, before the boat reaches port. Because a national ban on all finning would allegedly prevent smooth dogfish fishermen from processing their catch before it spoils, the Shark Conservation Act is currently pigeonholed in the Senate. As it stands, this one piece of gray literature is the only report that counters political claims that a ban on finning will hurt smooth dogfish fishermen.

So what are scientists and policymakers to do? Shark conservation is a pressing issue and every month counts. Without a national ban on shark finning, the United States lacks the political authority to influence other nations to adopt a fin ban. On the flip side, if a national fin ban really does negatively impact one of the few shark fisheries that might actually have a chance of becoming sustainable, then the law does not accurately reflect the current state of conservation. Waiting for solid peer-review before any action is taken may result in still more shark population collapses as the remaining fin fisheries continue, unopposed. Time is of the essence and the pace of peer-review is such that many fisheries papers emerge only as epitaphs. Conversely, making policy decisions based on preliminary, incomplete data is equally rash and could result in a complete reversal of the ban as more data becomes available, compromising a national ban.

When gray literature is the only option, it cannot be ignored. Although limited, this one report does begin to point us towards an answer. It is absolutely necessary to recognize that this is a policy-driven piece with a specific goal and specific biases. While formal peer-review may not be an option, the vast network of ocean science bloggers provides one of several opportunities for vigorous, public discussion among scientists from relevant fields and the chance for interested parties to engage and ask questions.

This is not to say that gray literature should be elevated to the same status as peer-review, but rather that it should not be completely ignored. The precautionary principle dictates that if an action has a suspected risk of causing harm to the public or to the environment, in the absence of scientific consensus that the action or policy is harmful, the burden of proof that it is not harmful falls on those taking the action. Here we see the first indication that finning dogfish at sea is not necessary, shifting the burden of proof onto those claiming that finning dogfish at sea is necessary.

But of course, the final word can never be gray literature. This report is a preliminary study, designed to launch a research project that will address the question through rigorous, controlled observation and experimentation. But, in the absence of evidence to the contrary, and provided there are not serious questions about the quality of the gray literature, precaution should be taken. Any initial judgment based on gray literature must be made with the caveat that policy will be re-examined post-peer-review.

When time is of the essence and peer-review is not available, gray literature cannot be ignored. But when gray literature is the only option for informing management decisions it must be subject to rigorous and public scrutiny, and any policy that emerges from it must be subject to the caveat that it will be re-addressed as more data becomes available.

~Southern Fried Scientist

UPDATE: David weighs in with some background on urea and osmoregulation in sharks.

UPDATE 2: See here for Chuck’s analysis of the gray paper at Ya Like Dags.

Flor Lacanilao (1997). Continuing problems with gray literature. Environmental Biology of Fishes, 49, 1-5.

Dear SFS,

I read your article on grey literature with great interest, especially as it dealt with peer review. Next month, I will be presenting the results of research on this topic at the Twelfth International Conference on Grey Literature, Prague, 6-7 December 2010,

http://www.textrelease.com/gl12program.html

ABSTRACT

Now that grey literature is readily catalogued, referenced, cited, and openly accessible to subject based communities as well as net users, the claims that grey literature is unpublished or non-published have sufficiently been put to rest. However, now that grey literature has met these former challenges and entered mainstream publishing, it requires in the spirit of science to have a system in place for the quality control of its content. This new challenge has recently been spurred by the IPCC affaire involving the use/misuse of grey literature and is now almost a daily topic in the world media.

The purpose of this study will then be to explore the degree to which grey literature is reviewed and to compare similarities and differences with formal peer review carried out in various degrees by commercial publishers. And, as such it can be seen as basic or fundamental research. This study will further distinguish the review process implemented by grey publishers from that of mavericks and vanity press, where personal opinion and pure speculation run rampant.

The method involves a review of the literature on peer review and its subsequent adaptation in the field of grey literature. Key concepts and elements in peer review will form the framework for our comparative analysis.

Ultimately, in this attempt to make the review process in grey literature more transparent to a wider public, our study will conclude with a checklist, guidelines, or recommendations for best/good practice as well as the design of an empirical survey that would produce further quantitative results, thus enabling a clearer description and explanation of the (peer) review process in grey literature.

Thanks for commenting. Sounds like a fascinating talk. Is there any chance of it being recorded or videolinked (thus becoming gray literature itself)?

You may want to check back in later today for an update, we’ve asked a smooth dogfish researcher to review this particular piece of gray literature, which will be posted over at our sibling site – yalikedags.southernfriedscience.com.

Hi Andrew,

Learning a bit about Bayesian approaches to statistics has made me wonder whether sometimes we pig-headedly deny ourselves valuable information because it doesn’t meet certain gold standards we set ourselves in the world of science. I run into this a lot with our whale shark work, where we’re dealing with somewhere between n=2 and n=6, which is barely enough to establish a group average, let alone a trend. But you know what? It’s n=6 more than anyone else has, so it seems silly to ignore the information because it’s limited. No, for that, and for the grey literature, it’s my opinion that “qualified information” can and should be used judiciously, AS LONG AS THE LIMITATIONS ARE RECOGNISED AND DISCUSSED OPENLY. Also, in these days of online repositories of supplemental information, it seems that there’s an opportunity for authors to lodge a particularly key piece of grey literature in the supplemental info. Some would regard this as a backdoor publication or an end-run around peer review, but I don’t think so, and it would certainly serve to make valuable data more widely available, at least for debate, if not for decision making.

Cheers,

Al

I’m totally with you on that. Until recently deep-sea biology has been shackled by low sample sizes. I remember once seeing a talk on the sedimentation rate in the Pacific Ocean based on 2 data points (granted those two points cost hundreds of thousands of dollars to collect). At some point you have to acknowledge the limitation of your data set while still making it as publicly available as possible and prudent. Who knows, maybe there’s enough data out there to pool?

I do disagree with sneaking gray lit into supplemental materials, though. To me that obscures the weakness of the data by giving it the veneer of peer review. I’d much rather see something like universities, or even just lab groups, producing their own technical reports, complete with caveats and limitations laid bare. That would also serve as a nice annual review type document, provided you weren’t worried about being scooped.

Besides personally hosting the data, I don’t see that there’s much to do. It might be helpful if it was more okay to ask people directly for unpublished data, and for scientists to make it available (as opposed to withholding it because they don’t want to give away something that isn’t yet and may never be reviewed and published.) Perhaps the situation will get better as more types of publishing become respected enough to “count” towards one’s scientific cred. If your institution and your peers won’t give you respect and acknowledgement (and may even look down on you) for sharing data in a certain way, there has to be a very compelling reason to shoulder this burden.

On the flip side, although peer-review as a way of distributing information often leaves things out, we need to keep it around and (strictly) enforce its boundaries to keep the quality of scientific information from degrading.

Getting scooped probably isn’t as big an issue in ecological/behavioral work as it is in, for example, chemistry or physics. If data collection takes a year and data analysis another year (which is conservative for a lot of projects in psychology and I suspect in oceanography), by the time you had (even grey) data to publish, it would be nigh impossible for somebody to over-take you procedure-wise even if they decided to steal your ideas.